Security issues and social engineering

Friday, April 28th, 2006

A couple of articles discuss a CD handed out in London that users then installed on their work computers. The debate that followed, over whether the workers or the technology were at fault for allowing the security breach, ends up missing the point. Both are at fault.

Schneier asks how many employees need that access. We should instead ask why they have that access at all. He starts to discuss that with an example: he is not a heating system expert, but he can still manage the temperature in his home, and he reflects that “computers need to work more like that.”

Sort of.

Some computers need to work more like that. From a user’s point of view, a bank teller’s world should be very limited… there’s no legitimate need to install software. This control was once in place – it was called “mainframe access” and nobody could install software unless authorized. However, the operating systems in use today on teller machines are generic (usually Windows) and have opened up all kinds of security nightmares because of their advanced capabilities.

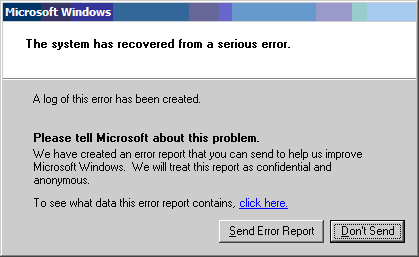

Have you ever tried locking down Windows? Really tightly? It’s impossible – something always breaks. Try to build a kid-proof interface and then imagine keeping reasonable adults within that as well. Making a specialized interface is possible – witness all the kiosks or ATMs that run Windows NT (and bluescreen in public view) – it is just hard and not cost-effective.

A user could be tremendously more effective and efficient if they only had access to what they needed, but you must then create that limited environment for each class of users… not only bank tellers, but bank accountants and bank loan officers and auto mechanics and on and on. Right now this isn’t cost-effective, but an enterprising company could figure out a process to make this easier. After all, TiVo did.
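To make the "only what they need" idea concrete, here is a minimal sketch of a per-role allowlist with default deny. Everything in it is an assumption for illustration: the role names, the application names, and the `may_run` helper are hypothetical and not taken from any real product or from the articles discussed above.

```python
# Hypothetical role-to-application allowlist.
# Role and application names are invented for illustration only.
ALLOWED_APPS = {
    "teller": {"teller_terminal", "cash_drawer"},
    "loan_officer": {"loan_origination", "credit_check", "email"},
    "accountant": {"general_ledger", "spreadsheet", "email"},
}


def may_run(role: str, app: str) -> bool:
    """Default deny: an app is allowed only if it is listed for the role.

    Unknown roles get an empty allowlist, so they can run nothing.
    """
    return app in ALLOWED_APPS.get(role, set())


if __name__ == "__main__":
    for role, app in [("teller", "teller_terminal"), ("teller", "cd_installer")]:
        verdict = "allowed" if may_run(role, app) else "denied"
        print(f"{role} -> {app}: {verdict}")
```

The lookup is the easy part. The cost described above lives in building and maintaining that table for every class of user, which is exactly the work nobody wants to pay for.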
